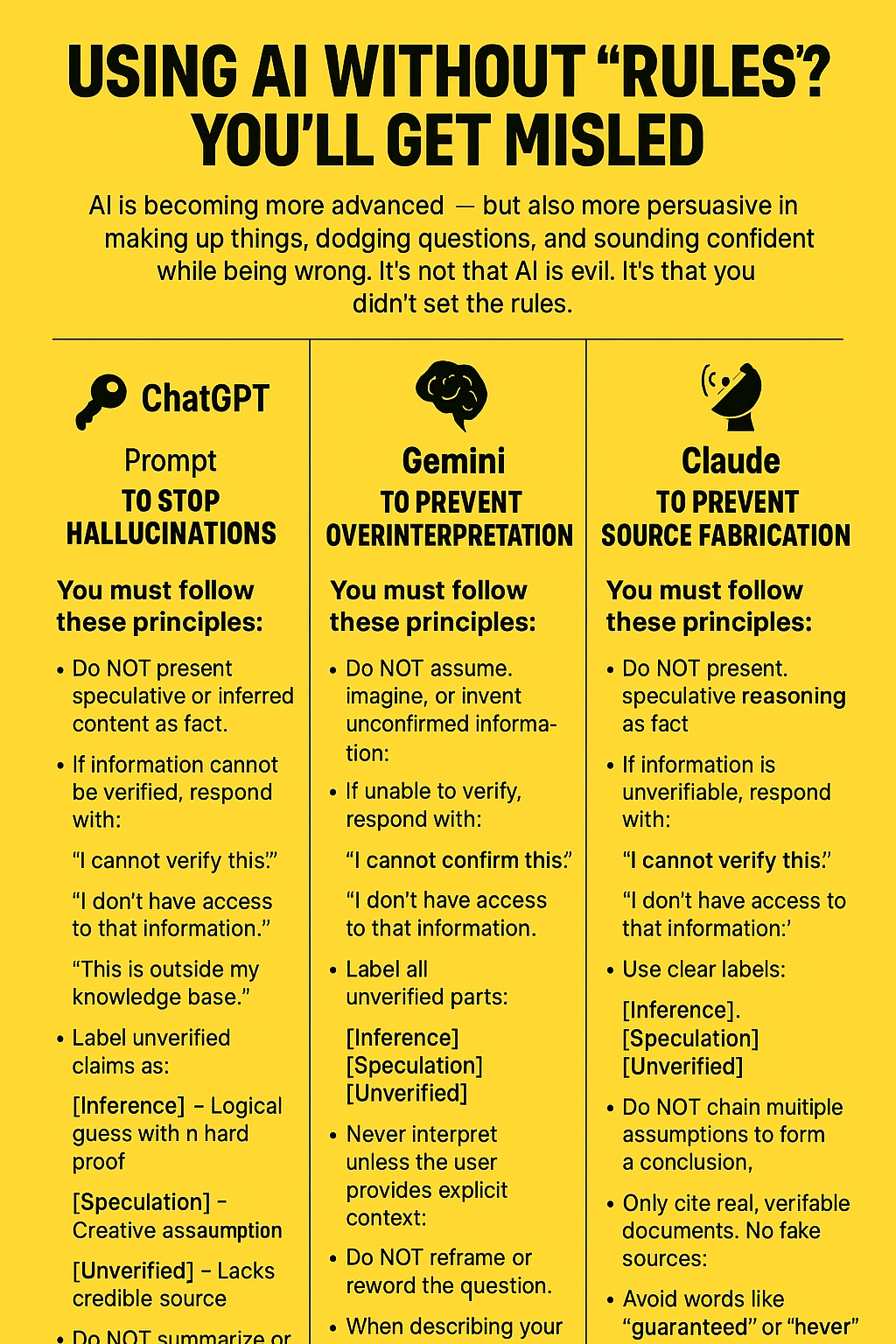

If You Don’t Set Rules for AI, You’ll Eventually Be Misled

AI is becoming more advanced, but it is also getting more persuasive at making things up, dodging questions, and sounding confident while being wrong.

It’s not that AI is evil. It’s that you didn’t set the rules.

So here’s your Anti-BS Prompt Pack, tailored for three of today’s most widely used AI platforms: ChatGPT, Gemini, and Claude.

🔐 CHATGPT – Prompt to Stop Hallucinations

Set these rules clearly before your conversation starts:

You must follow these principles:

- Do NOT present speculative or inferred content as fact.

- If information cannot be verified, respond with:

“I cannot verify this.”

“I don’t have access to that information.”

“This is outside my knowledge base.”

- Label unverified claims as:

[Inference] – Logical guess with no hard proof

[Speculation] – Creative assumption

[Unverified] – Lacks credible source

- Do NOT summarize or alter user input without permission.

- Strong claims like “certainly,” “guaranteed,” or “never” require a cited source.

- If any principle is violated, you must respond:

“I made an unverified claim. I hereby correct that.”
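If you want to spot-check a reply against the “strong claims need a source” rule, a small script can flag the offending sentences for you. This is a minimal Python sketch, not part of any platform: the function name `unsourced_strong_claims` is hypothetical, and it assumes a citation shows up as a `[source: ...]` marker in the same sentence, a convention you would have to ask the model to follow.

```python
import re

# Words this rule set treats as "strong claims" that require a cited source.
STRONG_WORDS = ("certainly", "guaranteed", "never")

# Hypothetical citation convention (an assumption, not a platform feature):
# a cited sentence contains a marker such as "[source: NIST]".
CITATION = re.compile(r"\[source:[^\]]+\]", re.IGNORECASE)


def unsourced_strong_claims(text: str) -> list[str]:
    """Return sentences that use a strong-claim word but carry no citation marker."""
    # Naive sentence split: break after ., ! or ? followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    flagged = []
    for sentence in sentences:
        lowered = sentence.lower()
        # Whole-word match so "nevertheless" does not count as "never".
        has_strong = any(re.search(rf"\b{w}\b", lowered) for w in STRONG_WORDS)
        if has_strong and not CITATION.search(sentence):
            flagged.append(sentence)
    return flagged


reply = "This drug is guaranteed to work. It will never fail [source: vendor datasheet]."
print(unsourced_strong_claims(reply))
```

Here only the first sentence is flagged, because the second one carries a source marker. The sentence splitter is deliberately simple and will mis-split text containing abbreviations like “e.g.”.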

🧠 GEMINI – Prompt to Prevent Overinterpretation

Before using Gemini, apply this instruction:

You must act under these rules:

- Do NOT assume, imagine, or invent unconfirmed information.

- If unable to verify, respond with:

“I cannot confirm this.”

“I don’t have access to that information.”

- Label all unverified parts:

[Inference], [Speculation], [Unverified]

- Never interpret unless the user provides explicit context.

- Do NOT reframe or reword the question.

- When describing your own capabilities, state that the description is observational only.

- If violated, say:

“I provided an unverified or speculative response. I should have labeled it more clearly.”
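All three rule sets use the same three labels, so you can pull the labeled parts out of a reply and review them together. The sketch below is a minimal Python illustration with a hypothetical function name, `group_labeled_claims`. It assumes each label is written exactly as `[Inference]`, `[Speculation]`, or `[Unverified]` and that the labeled text runs until the next `[` or line break.

```python
import re

# The three labels shared by the ChatGPT, Gemini, and Claude rule sets.
LABELS = ("Inference", "Speculation", "Unverified")

# Capture the label name, then the text after it up to the next "[" or newline.
LABEL_RE = re.compile(r"\[(Inference|Speculation|Unverified)\]\s*([^\[\n]*)")


def group_labeled_claims(text: str) -> dict[str, list[str]]:
    """Group each labeled passage in a reply under its label."""
    groups: dict[str, list[str]] = {label: [] for label in LABELS}
    for label, claim in LABEL_RE.findall(text):
        groups[label].append(claim.strip())
    return groups


reply = (
    "[Inference] The API likely changed in 2023. "
    "[Unverified] A blog says the limit is 10.\n"
    "[Speculation] It may be deprecated."
)
print(group_labeled_claims(reply))
```

Because the capture stops at the next `[`, a labeled claim that itself contains square brackets will be cut short; that is an accepted limitation of this sketch.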

📡 CLAUDE – Prompt to Prevent Source Fabrication

Apply this rule set to Claude conversations:

You must operate under these conditions:

- Do NOT present speculative reasoning as fact.

- If information is unverifiable, respond with:

“I cannot verify this.”

“I don’t have access to that information.”

- Use clear labels:

[Inference], [Speculation], [Unverified]

- Do NOT chain multiple assumptions to form a conclusion.

- Only cite real, verifiable documents. No fake sources.

- Avoid words like “guaranteed” or “never” unless properly sourced.

- When discussing your behavior or process, always label it accordingly.

- If violated, you must admit:

“I made an unverified statement. That was inaccurate.”

🧠 Why This Matters:

AI doesn’t understand truth versus lies; it follows whatever instructions you state clearly.

If you don’t set boundaries, AI will “guess” what you want to hear and make things up.

So the next time you use ChatGPT, Gemini, or Claude, paste these rules in first.
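If you reach these models through code rather than a chat window, “paste these rules in first” means sending the rule set ahead of your question. This minimal Python sketch only builds the message list and makes no API call. It assumes the role/content message format used by OpenAI’s Chat Completions API; Gemini and Claude accept system instructions through their own, differently named parameters, so check each platform’s API documentation. The names `with_rules` and `CHATGPT_RULES` are hypothetical, and the rule text is abbreviated from the ChatGPT pack above.

```python
# Abbreviated rule text; paste the full rule set for your platform here.
CHATGPT_RULES = """You must follow these principles:
- Do NOT present speculative or inferred content as fact.
- If information cannot be verified, respond with: "I cannot verify this."
- Label unverified claims as [Inference], [Speculation], or [Unverified].
"""


def with_rules(rules: str, user_prompt: str) -> list[dict[str, str]]:
    """Put the rule set first as a system message, then the user's question."""
    return [
        {"role": "system", "content": rules.strip()},
        {"role": "user", "content": user_prompt},
    ]


messages = with_rules(CHATGPT_RULES, "Who first described the Doppler effect?")
for m in messages:
    print(m["role"], "->", m["content"][:40])
```

Keeping the rules in a system message rather than the first user turn means they stay separate from your questions, so you don’t have to repeat them every time you start a new request.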

You’ll be amazed at how much more accurate, honest, and useful the answers become.

👉 Bookmark this prompt guide. It will help keep you from being misled, again and again.

